During an internal Aicadium learning session, Senior Software Engineer Nicholas Debeurre highlighted the communication challenges of Generative AI, such as bias and misinformation. He explained that GPTs can only rely on their training data and cannot discern between truth and falsehood like humans can.

Keep reading for more insights from the session.

What is generative AI?

Generative AI is a branch of computer science that seeks to replicate the human ability to create original, creative content. Initially, Generative AI focused on generating synthetic data that was indistinguishable from real data, to overcome the lack of available data. However, with advancements in technology and AI algorithms, the focus of Generative AI has shifted towards generating creative content that is on par with human-generated content.

A brief history

The history of chatbots and generative AI is a fascinating one, marked by many significant breakthroughs and technological advancements.

One of the first chatbots, ELIZA, was created in 1966. It was a rule-based chatbot designed to simulate conversations between a patient and a psychotherapist.

1968 – 1970: SHRDLU, an agent in a blocks world, was developed, and it could interact using natural language.

1986: The paper “Serial Order: A Parallel Distributed Processing Approach” helped lay the foundation for recurrent neural networks. Neural networks are now a vital part of many AI solutions.

2000s – 2010s: Technology advanced to the point where we could process the massive amounts of data needed for GPT-like models.

2014: Ian Goodfellow and colleagues introduced GANs (Generative Adversarial Networks), which pit two networks against each other to generate better outputs.

2017: Attention-based transformers were introduced. They later became a key component of GPTs, helping to improve the accuracy and efficiency of these models.

2018: Both Google and OpenAI implemented these transformers, resulting in the development of BERT at Google and the first version of GPT at OpenAI.

2021: OpenAI released DALL-E, an image generator that can create realistic images from textual descriptions. They have since released DALL-E 2, which builds on the success of the original.

2022: OpenAI released ChatGPT, a language model that has taken the world by storm with its impressive ability to generate high-quality text. ChatGPT has opened up new possibilities for generating content and has garnered significant attention from both the AI community and the general public.

How do GPTs learn?

As you might expect, these large language models need to take in a lot of text-based data before they can produce text of their own. A model like ChatGPT is trained on huge datasets that include books, articles, social media conversations, code and other text-based data.

As they take in this text, they build a mathematical model of the words and how they relate to each other. That model is stored as weights, which are used to estimate the probability of what the next word is going to be.
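To make the idea of next-word probabilities concrete, here is a deliberately tiny sketch. It is an assumption for illustration only: it counts word pairs (a "bigram" model) instead of training a neural network with weights, and the function name `train_bigram_model` and the sample corpus are hypothetical. Real GPTs are far more sophisticated, but the output has the same shape: a probability for each candidate next word.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count which word follows which, then turn the counts into probabilities."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    # Pair every word with the word that comes directly after it.
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1

    model = {}
    for current, followers in counts.items():
        total = sum(followers.values())
        # Normalise the counts so each word's followers sum to 1.
        model[current] = {word: count / total for word, count in followers.items()}
    return model


# Hypothetical toy corpus; a real model is trained on billions of words.
corpus = "the cat sat on the mat and the cat slept"
model = train_bigram_model(corpus)

# Probabilities for the word that follows "the".
print(model["the"])
```

In this toy corpus "the" is followed by "cat" twice and "mat" once, so the model rates "cat" as the more likely next word.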

One key advantage of modern transformers and other similar models is that they do not require annotated datasets to learn. In contrast to traditional supervised machine learning approaches, these models learn from raw text by predicting the next word, so the text itself supplies the correct answers. Removing the manual labelling step reduces the time and cost required to develop machine learning models. This learning happens during training, however. A deployed model does not automatically update itself from new data in real time.
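The reason no annotation is needed can be shown in a few lines. The sketch below is a simplified illustration, not how any particular GPT prepares its data: the function name `next_word_examples` and the `context_size` parameter are hypothetical, and real systems work on sub-word tokens instead of whole words. It shows that every position in a piece of raw text already contains its own "label", namely the word that comes next.

```python
def next_word_examples(text, context_size=3):
    """Turn raw text into (context, next_word) training pairs with no human labelling."""
    words = text.split()
    examples = []
    for i in range(1, len(words)):
        # The context is the few words before position i; the target is the word at i.
        context = words[max(0, i - context_size):i]
        examples.append((context, words[i]))
    return examples


examples = next_word_examples("GPTs learn patterns from raw text")
for context, target in examples:
    print(context, "->", target)
```

A six-word sentence yields five training examples for free, which is why large volumes of unlabelled text are so useful for this kind of model.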

How do GPTs get graded?

Grading GPTs still requires human input, as computers are not yet capable of determining the quality of their output. One common scenario for grading involves human evaluators assessing the accuracy, relevance, coherence, and other aspects of the model’s generated text. While there are different approaches to grading, human evaluation remains a crucial component in ensuring the quality and effectiveness of GPTs.

Here is an example scenario that illustrates one variation of this.

Step 1: Create a prompt (e.g. “How do I bake a cake?”).

Step 2: Present the prompt to both an AI and a human to receive a response from each.

Step 3: The responses are presented to another human, who reviews, rates and corrects them, and tries to identify how each one was generated.

Step 4: All of this information is used to provide feedback to the models.

There are two common metrics used to evaluate GPTs: perplexity and coherence.

Perplexity is a quantitative score that measures how well the model predicts the words in a sequence of text. A lower score means the model was less “surprised” by the text. Coherence, on the other hand, is a qualitative measure that assesses whether the model’s responses flow, make sense and stay on topic.
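As a rough illustration of how perplexity is calculated, the sketch below uses the standard definition: the exponential of the average negative log-probability the model assigned to each word that actually came next. The function name `perplexity` and the example probabilities are made up for illustration; in practice the probabilities come from the model being evaluated.

```python
import math


def perplexity(word_probabilities):
    """Perplexity = exp(average negative log-probability of the actual next words)."""
    if not word_probabilities:
        raise ValueError("at least one probability is required")
    negative_log_sum = -sum(math.log(p) for p in word_probabilities)
    return math.exp(negative_log_sum / len(word_probabilities))


# Hypothetical probabilities a model gave to the words that really appeared.
confident = perplexity([0.9, 0.8, 0.95])   # the model predicted the text well
uncertain = perplexity([0.2, 0.1, 0.25])   # the model was often surprised

print(f"confident model: {confident:.2f}")
print(f"uncertain model: {uncertain:.2f}")
```

One way to read the number: a model that always spreads its guess evenly over four words has a perplexity of exactly 4, so perplexity can be thought of as the number of words the model is effectively choosing between at each step.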

However, it’s important to note that GPTs do not communicate like humans, which is a topic of concern in the generative AI world. There is a lot of fearmongering around AI and its potential to take over the world. It’s essential to understand that GPTs are still far from human-like in their communication abilities, and their capabilities are limited to generating text based on patterns learned from vast amounts of data.

How do GPTs communicate?

GPTs converse using probability to model the next word in a sequence, with a bit of randomness for variation, resulting in a message generated on-the-fly. However, GPTs lack many of the inputs that humans take for granted. They can’t recreate prosody, which is the rhythm, sound, stress, and intonation of speech, and they can’t understand dialogue beyond just the text portion of it.
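The “bit of randomness” can be sketched with a common technique called temperature sampling. This is a simplified illustration under stated assumptions: the function name `sample_next_word`, the candidate words and their scores are all hypothetical, and real models choose among tens of thousands of sub-word tokens instead of three whole words.

```python
import math
import random


def sample_next_word(scores, temperature=1.0, rng=random):
    """Pick the next word at random, weighted by score; temperature controls variety."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    words = list(scores)
    # Dividing by the temperature sharpens (low) or flattens (high) the differences.
    scaled = [scores[word] / temperature for word in words]
    highest = max(scaled)
    # Subtracting the highest value keeps exp() numerically stable.
    weights = [math.exp(value - highest) for value in scaled]
    return rng.choices(words, weights=weights, k=1)[0]


# Hypothetical scores for the word after "How do I bake a ...".
scores = {"cake": 5.0, "bread": 1.0, "soup": 0.5}

rng = random.Random(0)  # fixed seed so the run is repeatable
print([sample_next_word(scores, temperature=0.01, rng=rng) for _ in range(5)])
print([sample_next_word(scores, temperature=10.0, rng=rng) for _ in range(5)])
```

With a very low temperature the top-scoring word is chosen almost every time. With a high temperature the choices become much more varied, which is why the same prompt can produce different responses.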

In contrast, humans take in multiple input sources in parallel, such as visual and auditory cues, and draw from a vast background knowledge of experiences and expertise. Therefore, it’s no surprise that GPTs can sometimes be completely wrong, as they only have access to one input source.

Language is a tool that has evolved over countless lifetimes and cultures, allowing us to share our internal experiences with others. However, it is far from perfect, and there is often a gap between what a speaker is trying to express and how the audience interprets it. Non-verbal cues and other tools can help bridge this gap, but they are not available to GPTs.

It’s important to recognise that communicating with a GPT or any AI requires a different approach than communicating with a person. GPTs lack the non-verbal cues and contextual understanding that humans possess, making it essential to tailor our communication methods accordingly. As such, we need to adjust our expectations when interacting with GPTs and acknowledge the limitations of their communication abilities.

What other communication challenges do GPTs face?

Misinformation – GPTs can inadvertently generate false information, often referred to as “lying” by the media or industry. But it’s not actual lying, since there is no intention behind an AI. This is a significant issue in the Generative AI community, and there is currently no clear solution. Some suggest that the problem may be inherent to the way current models work.

Resources – GPTs require massive amounts of data to train, which can be prohibitive in terms of hardware costs and memory usage.

Bias – GPTs can also be biased due to the biases of those who trained them, even if unintentional. Human-generated data and media are often coloured by personal beliefs and biases, and these can influence the model’s output.

Copyright – The use of data to train GPTs raises concerns regarding copyright ownership of the output generated by the models. While the researchers may own the model, it is much less clear who owns the output, and the use of such output for commercial purposes is a significant issue that needs to be addressed. The lack of clear guidance in this area poses significant societal and political challenges.

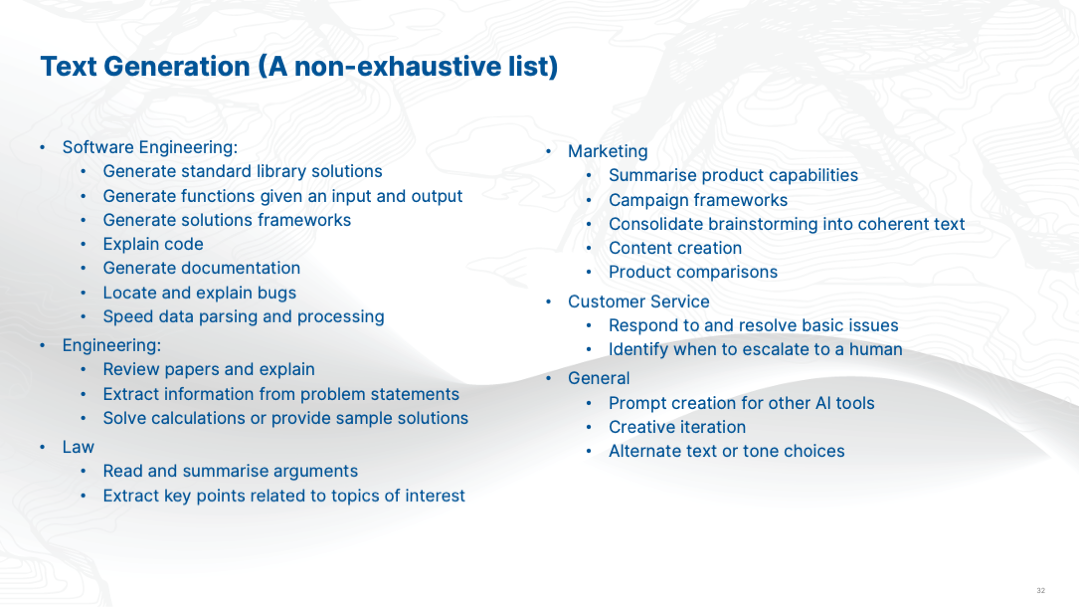

Text generation can augment the capabilities of various fields, rather than replacing humans. It can offer guidance or handle simple tasks, such as code explanation, documentation and function generation. Giving specific constraints tends to produce more accurate answers, although it does not remove the risk of errors.

Here are some applications of text generation:

The applications of Generative AI are numerous and varied. From aiding in code explanation to summarising legal cases, GPTs have the potential to augment and enhance various fields. However, it is important to remain aware of the potential pitfalls, such as bias and misinformation, and to continue developing ways to mitigate these risks. As the technology continues to advance, it is crucial that we consider the ethical implications and strive to use Generative AI for the betterment of society.